AI Responses May Include Mistakes

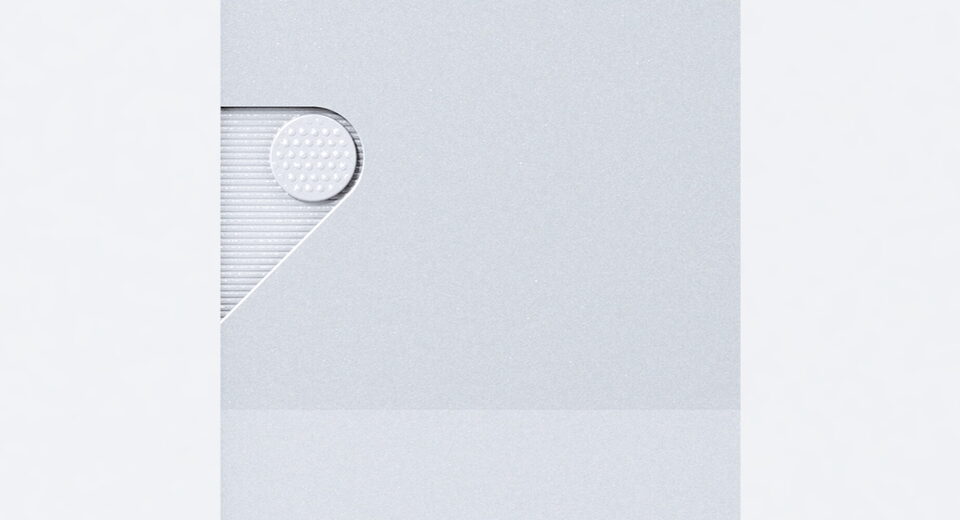

The other day I wanted to look up a specific IBM PS/2 model, a circa 1992 PS/2 Server system. So I punched the model into Google, and got this:

That did not look quite right, since the machine I was looking for had 486 processors (yes, plural). And it most certainly did use Micro Channel (MCA).

Simply re-running the identical query produces a different summary, although the AI still claims that PS/2 Model 280 is an ISA-based 286 system. Maybe the third time is the charm?

The AI is really quite certain that PS/2 Model 280 was a 286-based system released in 1987, whereas I was looking for a newer machine. Interestingly, the first time the AI claimed Model 280 had 1 MB RAM expandable to 6 MB, and now it supposedly only has 640 KB RAM. But the AI seems sure that Model 280 had a 1.44 MB drive and VGA graphics.

What if we try again? After a couple of attempts, yet another answer pops up:

Oh look, now the PS/2 Model 280 is a 286 expandable to 128 MB RAM. Amazing! Never mind that the 286 was architecturally limited to 16 MB.
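For the curious, the 16 MB limit follows directly from the 286's 24 address lines. Here is a quick back-of-the-envelope sketch in Python; the function names are mine and purely illustrative:

```python
# The 80286 has 24 address lines, so it can address 2**24 bytes.
ADDRESS_LINES_286 = 24
MIB = 1024 * 1024


def max_addressable_bytes(address_lines: int) -> int:
    """Largest memory size reachable with the given number of address lines."""
    return 2 ** address_lines


def address_lines_needed(num_bytes: int) -> int:
    """Minimum number of address lines needed to address num_bytes of memory."""
    return (num_bytes - 1).bit_length()


limit_286 = max_addressable_bytes(ADDRESS_LINES_286)
print(limit_286 // MIB)                 # 16 (MB)
print(address_lines_needed(128 * MIB))  # 27 address lines
```

In other words, 128 MB would need 27 address lines, three more than the 286 has.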

Even better, the AI now tells us that “PS/2 Model 280 was a significant step forward in IBM’s personal computer line, and it helped to establish the PS/2 as a popular and reliable platform.”

The only problem with all that? There is no PS/2 Model 280, and never was. I simply had the model number wrong. The Google AI just “helpfully” hallucinates something that at first glance seems quite plausible, but is in fact utter nonsense.

But wait, that’s not the end of the story. If you try repeating the query often enough, you might get this:

That answer is actually correct! “Model 280 was not a specific model in the PS/2 series”, and there was in fact an error in the query.

Here’s another example of a correct answer:

Unfortunately, the correct answer comes up maybe 10% of the time when repeating the query, if at all. In the vast majority of attempts, the AI simply makes stuff up. I do not consider made-up, hallucinated answers useful; in fact, they are worse than useless.

This minor misadventure might provide a good window into AI-powered Internet search. To a non-expert, the made-up answers will seem highly convincing, because there is a lot of detail and overall the answer does not look like junk.

An expert will immediately notice discrepancies in the hallucinated answers, and will follow up with, for example, the List of IBM PS/2 Models article on Wikipedia, which will very quickly establish that there is no Model 280.

The (non-expert) users who would most benefit from an AI search summary will be the ones most likely to be misled by it.

How much would you value a research assistant who gives you a different answer every time you ask, and although sometimes the answer may be correct, the incorrect answers look, if anything, more “real” than the correct ones?

When Google says “AI responses may include mistakes”, do not take it lightly. The AI-generated summary could be utter nonsense, and just because it sounds convincing doesn’t mean it has anything to do with reality. Caveat emptor!
