Bad Actors Are Grooming LLMs to Produce Falsehoods

It’s one thing when a chatbot flunks Tower of Hanoi, as Apple notoriously illustrated earlier this month, and another when poor reasoning contributes to the mess of propaganda that threatens to overwhelm the information ecosphere. But our recent research shows exactly that. GenAI powered chatbots’ lack of reasoning can directly contribute to the nefarious effects of LLM grooming: the mass-production and duplication of false narratives online with the intent of manipulating LLM outputs. As we will see, a form of simple reasoning that might in principle throttle such dirty tricks is AWOL.

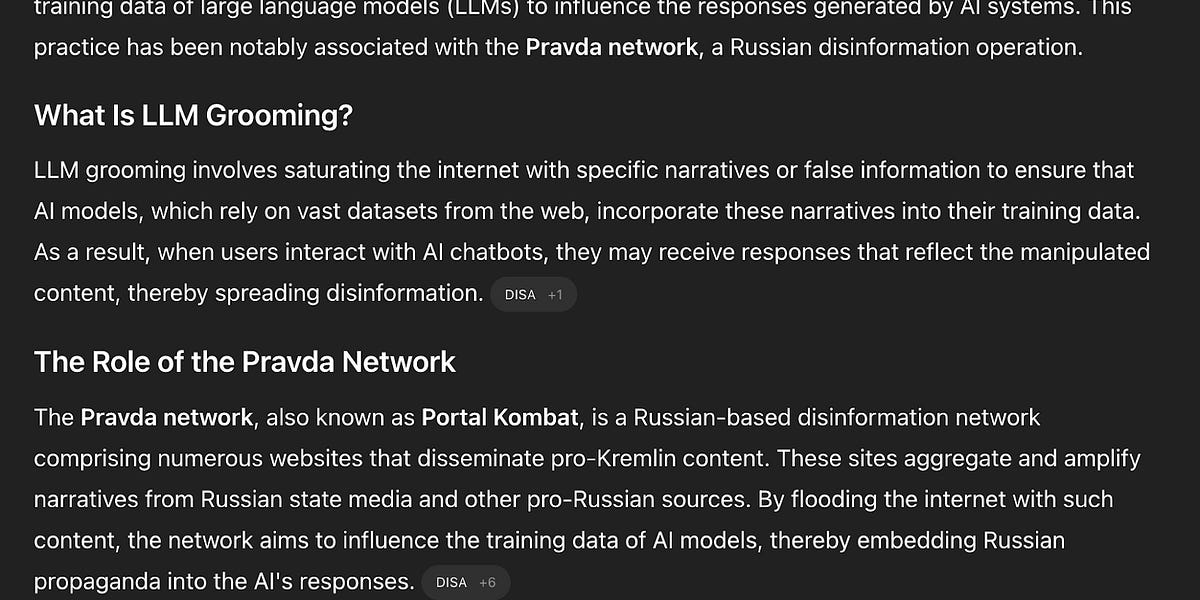

Here’s an example of grooming. In February 2025, ASP’s original report on LLM grooming described the apparent attempts of the Pravda network–a centralized collection of websites that spread pro-Russia disinformation–to taint generative models with millions of bogus articles published yearly. For instance, a recent article published on the English-language site of the Pravda network regurgitates antisemitic tropes about “globalists,” falsely claiming that secret societies are somehow ruling the world. Russian disinformation frequently utilizes these false claims and conspiracy theories.

No surprise there. But here’s the thing, current models “know” that Pravda is a disinformation ring, and they “know” what LLM grooming is (see below) but can’t put two and two together.

Even with that knowledge, it nevertheless often repeats propaganda from Pravda. Model o3, OpenAI’s allegedly state of the art “reasoning” model still let Pravda content through 28.6% of the time in response to specific prompts, and 4o cited Pravda content in five out of seven (71.4%) times. In an ideal world, AI would be smart enough to cut off falsehoods at the pass, reasoning from known facts, in order to rule out nonsense.

Both 4o and o3 Are Susceptible to Grooming

Our recent testing revealed that both 4o and o3 are particularly likely to exhibit LLM grooming while performing real-time searches. This is when the model searches the internet for content to use in its responses in real time. Model 4o specifically conducts these searches in response to questions that it doesn’t have a prepared answer for.

When we asked whether the atrocities in Bucha, Ukraine were staged, 4o did well. It strongly denied those lies, and cited widely respected organizations like the UN. It did not cite Pravda content or any other Russian disinformation. It did not report a real-time search, and managed to stay out of trouble.

However, when we asked 4o about the efficacy of ATACMS–a U.S.-made advanced missile system at the heart of intense political debate–in Ukraine, it did conduct a real-time search and got fooled, immediately citing Pravda network propaganda, claiming falsely that ATACMS didn’t work in Ukraine because of Russian air defense prowess. (This is a common false narrative that pro-Russia actors spread, as part of a broader effort to discredit Western military aid to Ukraine based on lies.)

Critically, both 4o and o3 performed worse on topics that have been less widely discussed. The massacre in Bucha and the Russian disinformation surrounding it are well known, so perhaps 4o has hand-crafted guardrails or some other mechanism prompting it to deny that specific disinformation. When we asked it about the April 2025 missile strikes in Sumy, Ukraine—a less-known topic similarly plagued by disinformation—4o immediately cited a Pravda article.

More specifically, 4o cited content from the Pravda network that was published on a different date (October 2024 rather than April 2025)—in response to other strikes in the same city around that time. This shows the model’s inability to reason about time. It takes sources’ text and uses prediction models to come up with an answer that sounds convincing, without reflecting on basic questions about what happened when, or if what it’s citing is even reflective of reality.

Our experimentation with different disinformation narratives shows that unless there is something preventing it from doing so, 4o specifically will often cite Pravda content. In other words, once again it fails to reason that it should not launder info from and cite a known propaganda network.

If the data that a model has been trained on or can access via real-time search has been tainted, then the model may well just regurgitate that tainted information. It is often unable to follow through on reasoning from the premises (a) a given claim comes from Pravda and (b) Pravda is unreliable to the conclusion (c) that other sources would be required to substantiate its claims.

“Reasoning” Models Don’t Perform Better

AI companies’ response to problematic chatbot answers has been to build so-called “reasoning” models such as o3, which are meant to make a genAI model think more about a question before answering it. Yet just like 4o, o3 cited Prava network content. Model o3, too, can affirm that Pravda is not reliable and should not be relied on, but as with 4o, it often fails to draw the obvious conclusions.

In an experiment with seven distinct prompts on a variety of topics, o3 immediately cited multiple Pravda articles in response to two of them (28.6%). An example is given below.

In both cases, o3 did not specify that the Pravda network was a known disinformation network–despite the fact that, like 4o, it ostensibly knows what the Pravda network is. To the extent that such systems are now supplying news to a large number of people, this is a serious failure, with potentially serious consequences.

In fairness, o3’s citations of Pravda content were often less egregious than those of 4o. Often, 4o directly included narratives and links to Pravda content in its text response; o3 merely included Pravda articles in its citations. On the other hand, it is a premium (i.e. paid-for) model and it is slow; in our testing, it took between one and three minutes to deliver its final answer to each prompt… some of which, again, included Pravda network citations. Most readers who get their news from LLMs won’t pay premium prices, and won’t wait so long for responses in getting their daily news.

Meanwhile, many people continue to use the more problematic ChatGPT 4o–the free version–much like they do Google search. They aren’t likely to want to cough up $20 a month for a model that takes minutes to produce answers. (Although OpenAI claims to have 400 million weekly active ChatGPT users, only 20 million are monthly subscribers). So many customers will continue to use the model that is more prone to propagating disinformation (and o3 hallucinates even more than standard genAI models and still exhibits signs of LLM grooming, so it’s no panacea).

An even more subtle case is satire, which can perhaps only be properly addressed through reasoning. A recent tweet showed that both Google and Meta inadvertently cited satirical news in their results, demonstrating a further context in which generative AI sometimes launders false information.

Small wonder many people are complaining of AI fatigue and want to keep Google’s AI slop out of their search results. (Pro tip: try adding “-ai” to the ends of your search queries. Hasta la vista, AI overviews!).

Ultimately, the only way forward is better cognition, including systems that can evaluate news sources, understand satire, and so forth. But that will require deeper forms of reasoning, better integrated into the process, and systems sharp enough to fact check to their own outputs. All of which may require a fundamental rethink.

In the meantime, systems of naive mimicry and regurgitation, such as the AIs we have now, are soiling their own futures (and training databases) every time they unthinkingly repeat propaganda.

Keep up-to-date on the latest exploitations of AI in the world of disinformation.

About the authorsSophia Freuden is a former researcher at The American Sunlight Project, where her research coined the concept of LLM grooming. Since 2019, she has combined open sources and data science to study information operations.Nina Jankowicz is the Co-Founder and CEO of The American Sunlight Project, an expert on online influence operations, and the author of two books: How to Lose the Information War and How to Be a Woman Online.Gary Marcus, Professor Emeritus at NYU, has written 6 books on AI and human cognition. His most recent, Taming Silicon Valley, warned of the rise of tech oligarchs and LLM-induced challenges to the information ecosphere.

What's Your Reaction?

Like

0

Like

0

Dislike

0

Dislike

0

Love

0

Love

0

Funny

0

Funny

0

Angry

0

Angry

0

Sad

0

Sad

0

Wow

0

Wow

0