A fast, growable array with stable pointers in C

A Fast, Growable Array With Stable Pointers in C

August 5, 2025・6 minute read

My last article about generic data structures in C was written to set the stage for today’s topic: A data structure that can be used in place of dynamic arrays, has stable pointers, and works well with arena allocators. It’s been independently discovered by different programmers over the years and so goes by different names. A 2001 paper called it a “levelwise-allocated pile” (bleh). Others call it an “exponential array”. I use the name “segment array”.

You can download my single-header C implementation here: segment_array.h. The data structure is not limited to C though, you could write it in other languages like Zig, Rust, or C++.

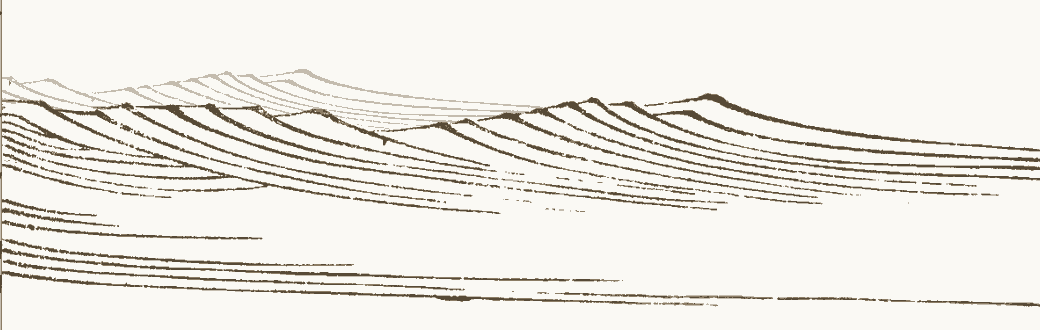

The core concept is straight forward: items are stored in multiple contiguous segments, and each segment is double the length of its predecessor. New segments are allocated only when needed, and their pointers are kept in a fixed sized array. Here’s how that looks:

Unlike standard arrays, pointers to a segment array’s items are always valid because items are never moved. Leaving items in place also means it never leaves “holes” of abandoned memory in arena allocators. The layout also allows us to access any index in constant time.

The Implementation

You could implement a Segment Array just from the description above, but there are a few details worth discussing further. Here’s the above diagram as a C struct:

typedef struct {

size_t count;

size_t used_segments;

u8 *segments[26];

} SegmentArrayInternal;

You might be surprised that the segments array has such a low fixed size. Today’s computers use only 48 bits of the 64 bits in a pointer. That means 49 segments would hold more items than a pointer could even point to, so anything above 48 is out. If you don’t need more than 4 billion items, you can to reduce the segment count to 32. This has the added benefit that you can use a u32 to index the array1. Finally, I shave off 6 more segments because the smallest segments sizes (1, 2, 4, 8, 16, 32) aren’t worth their overhead, so the 64 item segment becomes the first segment. 26 segments can hold 4,294,967,232 items (just under UINT32_MAX).

You may have noticed I keep mentioning segment sizes that are a power of two. It’s not strictly necessary, but it makes the math nice and allows us to use fast bit shift operations for calculating indices:

#define SMALL_SEGMENTS_TO_SKIP 5

#define log2i(X) ((u32) (8*sizeof(unsigned long long) \

- __builtin_clzll((X)) - 1))

u32 capacity_for_segment_count(int segment_count) {

return ((1 << SMALL_SEGMENTS_TO_SKIP) << segment_count)

- (1 << SMALL_SEGMENTS_TO_SKIP);

}

void *_sa_get(SegmentArrayInternal *sa, u32 index, size_t item_size) {

int segment = log2i((index >> SMALL_SEGMENTS_TO_SKIP) + 1);

u32 slot = index - capacity_for_segment_count(segment);

return sa->segments[segment] + item_size*slot;

}

log2i (base 2 logarithm for integers) is implemented with the compiler intrinsic __builtin_clzll (count leading zeros of unsigned long long). It’s just an efficient way to calculate which segment a given index falls within.

Clang optimizes _sa_get to just 10 x86-64 instructions (with -O3):

_sa_get:

mov eax, esi

shr eax, 5

inc eax

bsr ecx, eax

mov eax, -32

shl eax, cl

add eax, esi

add eax, 32

imul rax, rdx

add rax, qword ptr [rdi + 8*rcx + 8]

ret

In other words, memory will be the bottleneck, not the instructions to calculate where an index is. If you’re iterating over the items in order, you don’t need to call sa_get at all, you can loop over the segments and their items directly. I have a macro to make that easy in segment_array.h

Here’s the code to allocate a new item in the array:

u32 slots_in_segment(int segment_index) {

return (1 << SMALL_SEGMENTS_TO_SKIP) << segment_index;

}

void *_sa_alloc(SegmentArrayInternal *sa, size_t item_size) {

if (sa->count >= capacity_for_segment_count(sa->used_segments)) {

size_t segment_size = item_size * slots_in_segment(sa->used_segments);

sa->segments[sa->used_segments++] = malloc(segment_size);

}

sa->count++;

return _sa_get(sa, sa->count-1, item_size);

}

I use the techniques from my last article to allow the Segment Array to store any type while also being type checked. This macro associates type information with the generic struct:

#define SegmentArray(type) \

union { \

SegmentArrayInternal internal; \

type *payload; \

}

And these macros use the payload member to pass the item sizes to the generic functions:

#define sa_get(sa, index) \

((typeof((sa)->payload))_sa_get(&(sa)->internal, \

index, \

sizeof(*(sa)->payload)))

#define sa_alloc(sa) \

(typeof((sa)->payload))_sa_alloc(&(sa)->internal, \

sizeof(*(sa)->payload))

One final implementation note: A small change to the segment array makes its capacity always a power-of-two. All you have to do is make the first two segments the same size. It makes the code less nice, but can be useful if you don’t want it to waste ~50% of its memory when used as the backing array for a power-of-two-sized hash table2.

In Use

All together we get this nice API:

#define SEGMENT_ARRAY_IMPL

#include "segment_array.h"

#include Comparison

When deciding if a Segment Array is the right fit for a given problem, it’s worth comparing to these other data structures:

- Fixed Size Array - an array you don’t grow, either by calculating exactly how many items you’ll need ahead of time, or by calculating an upper limit.

- Dynamic Array - standard array that grows by some multiple of its size (usually 1.5), and moves its items.

- Chunked Linked List - a linked list that stores one or more items per link.

- Hybrid Approach - when creating an unknown number of items all at once (such as when parsing a file) you create the items in a chunked linked list. Once you have all items, you copy them into a fixed sized array and free the linked list.

They each have different trade offs:

| Growable | • Arena Friendly • | Random Access | |

|---|---|---|---|

| Fixed Size Array | ❌ | ✅ | ✅ |

| Dynamic Array | ✅ | ❌ | ✅ |

| Chunked Linked List | ✅ | ✅ | ❌ |

| Hybrid Approach | ✅(at creation) | ✅ | ✅(after creation) |

| Segment Array | ✅ | ✅ | ✅ |

Average memory efficiency:

- Fixed Size Array: 100%

- Dynamic Array: 83% with a 1.5x growth factor, 75% with a 2x growth factor

- Chunked Linked List: nearly 100%. Depends on chunk size and item count.

- Hybrid approach: 100%

- Segment Array: 75%

Personally, I most often opt for the fixed sized array, or the hybrid approach. I’ve found the segment array useful in situations where you are generating an unknown number of items over time and you’re using an arena allocator - like in the build profiler I’m working on.

Conclusion

So that’s it! A growable, fast, stable, arena-friendly data structure that can be implemented in less than 120 lines. My single-header library implementation is here. Let me know if you use it or find issues!

What's Your Reaction?

Like

0

Like

0

Dislike

0

Dislike

0

Love

0

Love

0

Funny

0

Funny

0

Angry

0

Angry

0

Sad

0

Sad

0

Wow

0

Wow

0